This series features interviews with people building at the forefront of AI. If you enjoyed this conversation, who should I interview next?

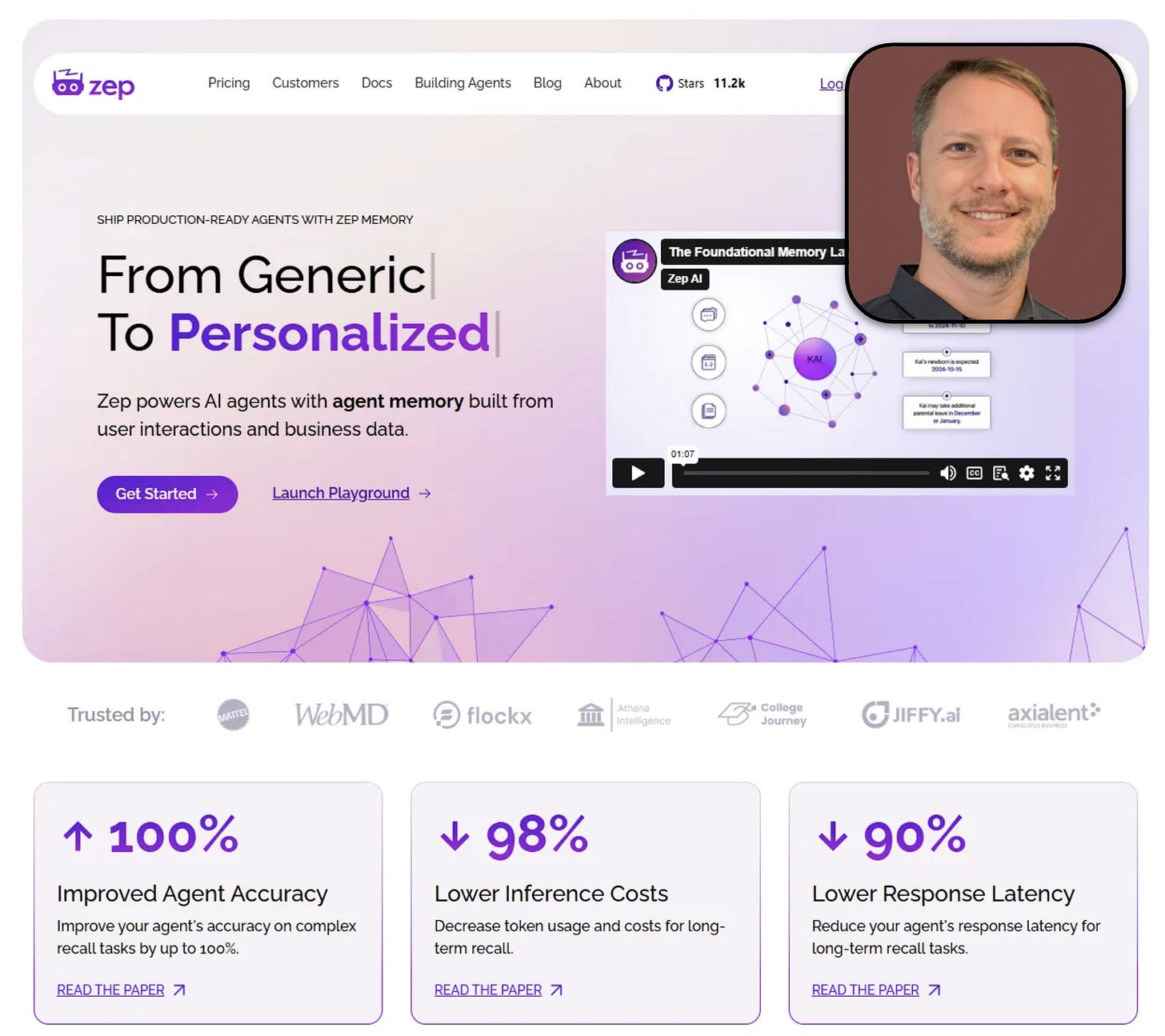

In this interview, I speak with Zep’s CEO, Daniel Chalef. Zep, built on top of Graphiti, powers AI agents with knowledge graph memory built from user interactions and business data. I first came across Zep more than a year ago. I was looking for a memory solution beyond vectors and they stood out as the only one considering the temporal nature of data. Graphiti is now one of the fastest growing open source projects — check it out.

Key learnings

AI memory can't be generic—it has to understand your business: Daniel learned that memory systems need to be domain-specific because a mental health app tracking emotional states and therapeutic progress has completely different memory requirements than an e-commerce agent remembering purchase patterns. The entities and relationships that matter are fundamentally different across domains. Generic memory solutions fail because they treat all context as equivalent.

Customer intimacy beats feature completeness in AI: While most B2B companies focus on features, Daniel focuses on customer intimacy—the agent's ability to respond accurately and personally. This drives conversion and retention because generic or hallucinated responses erode trust. For AI products, trust is the entire game, and personalization is how you build it.

User preferences have expiration dates that embeddings can't track: Vector databases treat information as immutable, but real user data changes and conflicts over time. When someone says "I love Adidas shoes" but later "these Adidas shoes fell apart," temporal knowledge graphs can invalidate the old preference while vectors just store both as independent facts. Time-aware context is crucial for AI products.

What is Zep?

Kenn So: I reached out because I'm a fan of what you're building at Zep. Super appreciate you taking the time. I was looking through LinkedIn — you wore so many different hats, which is very interesting. I'll poke around that during the interview. To get started, can you share about yourself, the company, and the open source project?

Daniel Chalef: So I'm an engineer turned founder. Zep is my second startup. It's also my second open source startup. In between the two startups, I took on many different roles: I led very product-focused ML and engineering groups, as well as marketing and corporate development teams.

Interesting set of experiences. The through line to my career has been I've always worked on very technical products that were being sold to technical buyers—engineers, data science leaders, ML leaders, etc.

Zep is near and dear to my heart because it's actually an agglomeration of a bunch of my experiences across both ML as well as some of my marketing experiences. This will become apparent as I unpack the vision for Zep and where we're going.

You can think of Zep as a memory layer for the agent stack. We think more expansively around what memory is and how we can help our customers.

We need memory because without it, we can't adequately personalize responses for an agent attempting to solve a problem for a user or respond to a user. If agents don't have that personalized context, they're going to hallucinate at worst or respond generically at best.

And that doesn't do what's important for our customers, which is solve problems and drive customer intimacy. We work with a lot of companies who really view customer intimacy as a key to building a successful agent.

What I mean by customer intimacy is that the agent is able to respond accurately in a personalized way. Why intimacy is important is because it drives conversion rates and drives retention. Because if an agent responds generically or hallucinates, that erodes customer trust, which is the antithesis of customer intimacy.

We primarily work with B2C companies, many at scale. You can see why there's that intersection with customer intimacy. That's everybody from consumer mental health apps through to coaching apps, through to e-commerce. We're even working with, to keep it relatively generic, big consumer goods companies.

Where Zep is different is that we view memory as being expansive. The types of companies that we work with have many touch points for the user beyond just the agent. So there might be email, support cases, billing data, data coming from SaaS applications, so actual events—a user did X or a user did Y.

We allow developers to send us a stream of both chat messages and business data, which is what we call the broader context I just described.

We then integrate it together on a knowledge graph. It's not just any knowledge graph, it's a temporal knowledge graph. So we're able to understand how user state changes over time. Preferences might change over time, and there might be conflicting information that we later receive that we then have to integrate into the graph.

By using the temporal metadata that we capture, we're able to correctly integrate conflicting information into the graph. For example, invalidate an old preference:

I love Adidas shoes, but because my shoes fell apart, I might be angry. So Zep understands the emotional valence, understands the context, understands the temporal dimension, and is able to say, oh, that old preference is now invalid.
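To make the invalidation idea concrete, here is a minimal Python sketch. It is not Zep's or Graphiti's implementation: the `Fact` and `TemporalFactStore` names, the hand-written `CONTRADICTS` table, and the dates are all assumptions made for illustration. Each fact carries a `valid_at` timestamp, and a newer contradicting fact closes out the older one by setting its `invalid_at` instead of deleting it.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional

# Hypothetical, hand-written contradiction table. A real system would
# likely need a model to judge whether two statements conflict.
CONTRADICTS = {("LOVES", "DISLIKES"), ("DISLIKES", "LOVES")}


@dataclass
class Fact:
    subject: str
    predicate: str
    obj: str
    valid_at: datetime
    invalid_at: Optional[datetime] = None  # None means still believed true


class TemporalFactStore:
    def __init__(self) -> None:
        self.facts: list[Fact] = []

    def add(self, new: Fact) -> None:
        # Close out any still-valid older fact that the new one contradicts.
        for old in self.facts:
            if (
                old.invalid_at is None
                and old.subject == new.subject
                and old.obj == new.obj
                and (old.predicate, new.predicate) in CONTRADICTS
                and old.valid_at <= new.valid_at
            ):
                old.invalid_at = new.valid_at
        self.facts.append(new)

    def current(self, subject: str) -> list[Fact]:
        # Only facts that have not been invalidated.
        return [f for f in self.facts if f.subject == subject and f.invalid_at is None]


store = TemporalFactStore()
# Illustrative dates, not taken from the interview.
store.add(Fact("user", "LOVES", "Adidas shoes", datetime(2024, 1, 5)))
store.add(Fact("user", "DISLIKES", "Adidas shoes", datetime(2024, 6, 1)))
current_predicates = [f.predicate for f in store.current("user")]
```

The old preference stays in the store with an end date, so the history remains queryable while only the newer fact is returned as current. A plain vector store, by contrast, would hold both statements with nothing linking them.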

We essentially view Zep more broadly than just memory. It's a customer data layer for the agent stack.

When we think about memory, we also have a very strong perspective that memory is not one-size-fits-all. Memory is very much a domain-specific implementation.

The types of things that you care about as a developer of a mental health application are going to be very different to the types of things you care about as an e-commerce agent developer.

So we allow developers to define custom domain-specific entities and edges in the Zep graph. They can capture specific things—things like media preferences or shoe preferences and retrieve those more deterministically from the graph with higher data quality and higher accuracy.
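As a rough illustration of why typed entities help, the sketch below models domain-specific node types as Python dataclasses and retrieves them by type rather than by similarity. This is not Zep's API: the `Entity`, `ShoePreference`, and `MediaPreference` classes, their fields, and the `of_type` helper are hypothetical names chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Entity:
    name: str


@dataclass
class ShoePreference(Entity):
    # Hypothetical domain-specific fields for an e-commerce agent.
    brand: str
    sentiment: str


@dataclass
class MediaPreference(Entity):
    genre: str


# Illustrative nodes, as if extracted from a user's interactions.
nodes = [
    ShoePreference(name="running shoes", brand="Adidas", sentiment="negative"),
    MediaPreference(name="evening viewing", genre="documentary"),
    Entity(name="billing address"),
]


def of_type(items, entity_type):
    """Return only nodes whose exact type matches, with no fuzzy matching."""
    return [n for n in items if type(n) is entity_type]


shoe_prefs = of_type(nodes, ShoePreference)
```

Because the lookup is keyed on a declared type, the agent gets back exactly the shoe preferences and nothing else, which is the kind of deterministic retrieval described above.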

How it all started

Kenn So: There's a lot to dig in there. I saw the entity types blog post, which was great. But I want to take a step back into maybe the beginning of Zep as well. From LinkedIn, I saw you started March 2023. Walk me through that. Those were really early days post-ChatGPT.

Daniel Chalef: It was crazy because it was just after the seminal agent paper. When I started really thinking about this was before we called agents "agents."

I'd been using language models back to BERT and BART and others back in 2017, 2018. I'd been thinking about them for a long time and independently thought that we could be building something that could work autonomously.

I started to build an application that would take boring old policy and process documents and turn them into something living and breathing. This was during the GPT-3.5 vogue, when there were all the chat-over-documents applications, Q&A over docs, and I was like, oh, come on, there's no defensibility here.

I wanted to build something that was far more compelling—an agent that would actually take and consume documents and turn them into a process.

There was no state. The models behind these applications were stateless. I would have to determine what goes into a prompt. Prompts were small. The token windows were very small then, like 4,000 tokens. I had to work out how to structure that, and I built something that would basically summarize conversations. I open-sourced it, created LangChain connectors for it, did a bunch of work with LangChain, and actually became a top 10 contributor.

That's how I got to thinking about memory, at least. As I started thinking about the opportunities for what we would be building, I started realizing, given my experience with ML and working at scale, that there are all sorts of pipelines that we need to build to make memory into something and then retrieve memory. Memory shouldn't just be the conversational domain. My application is emitting data as well. So what do I do with that?

How do I contextually recall the right data? I can deterministically pull in data from a Postgres database. But sometimes I don't know what data I should pull in and when. That non-deterministic part covers the things I don't know at development time and the situations that I can't anticipate.

That is where agentic behavior really came into the fore and where having a memory service that allowed agents to recall both past interactions and business data was really useful. One thing led to another, got into YC, raised capital, built a service, a multi-tenant service.

People like you started using the product, and we started getting a lot of enterprise inbound as well. So we have tons of pilots now with large enterprises and, yeah, it's been exciting.

Why graphs beat vectors for memory

Kenn So: Going a little bit technical, there's this philosophical debate around knowledge graphs versus vectors that goes back even to the early days of 2023. Was the knowledge graph structure something that you homed in on early?

Daniel Chalef: We knew pretty early on that it was going to be very difficult to capture enough context, temporal and relational context, in a vector database. Vector databases, embedding vectors, exist independent of each other and are, in many respects, immutable. There's no way that you can understand causal relationships between vectors in a vector database.

We initially started with vector search, but knew that it was inadequate and attempted to work out ways that we could understand causal relationships, understand temporality at more depth. Having worked with graph databases in the past and graphs at scale, I knew that the intersection of graph and semantic would be a very powerful approach to memory.

What Zep does today, by virtue of leveraging Graphiti, is use both BM25 and semantic search to find subgraphs within the broader user graph or user record, and then use traversal to retrieve relevant recent memory. That way an agent can reason over the causal and temporal nature of the memory it is presented with. This allows agents to do things like root cause analysis, because they're not only getting "I can't log in" and "the user's account is suspended," they're also getting "we couldn't charge the credit card."

And why couldn't we charge a credit card? Because the credit card is expired. And you can get that with the graph, because you can look at the relationships.
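The root cause walk in this login example can be sketched as a plain graph traversal. This is a toy, not Graphiti's retrieval code: the `CAUSED_BY` edges and the `causal_chain` function are assumptions for illustration, and the starting node is passed in directly, whereas in the system described it would be located by BM25 and semantic search first.

```python
from collections import deque

# Hypothetical edges: each event points to the events that caused it.
CAUSED_BY = {
    "user cannot log in": ["account suspended"],
    "account suspended": ["credit card charge failed"],
    "credit card charge failed": ["credit card expired"],
    "credit card expired": [],
}


def causal_chain(graph: dict[str, list[str]], start: str) -> list[str]:
    """Breadth-first walk over caused-by edges, returning nodes in visit order."""
    seen = {start}
    order = [start]
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for cause in graph.get(node, []):
            if cause not in seen:
                seen.add(cause)
                order.append(cause)
                queue.append(cause)
    return order


chain = causal_chain(CAUSED_BY, "user cannot log in")
```

Following the edges from the symptom surfaces the expired card as the last node in the chain. Independent embeddings of the same four statements would carry no such links between them.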

Kenn So: The thing that drew me to Zep is how you have productionized knowledge graphs for AI. The graph construction, you can kind of hack it together, but building the pipeline to constantly update the knowledge graph is hard. And that's something you’ve built.

Daniel Chalef: Yes. And it was fun building it. We decided to build the heart of Zep as open source, the Graphiti graph framework. We knew it had broader applicability. So we have users building sales intelligence companies. Why? Because a temporal knowledge graph is really useful for mapping companies, the relationships between companies, the employees of companies, how their roles change over time, and how they might move to a different company.

Teaching customers to use the right tool

Kenn So: Well, let's go down this thread of technical products. Do you find your customers using Zep along with a more traditional database for storing all the chats and pulling both together? Or do you find them pretty much sticking with Zep to feed all the contextual information into a model?

Daniel Chalef: We have customers who attempt to overuse Zep. They want to use it for relational data. They want to use it for high-frequency event data. They want to use it for RAG. And, you know, we're often telling them, if the data structure is known at development time, if the data itself is known at development time, and there are simple pipelines to update that data, and it is something that is either an immutable data structure, or is an append-only log of sorts, use a relational database.

You can more deterministically retrieve your data from a relational database, and it doesn't need to be on a graph.

Just because graphs are cool doesn't mean you need to use a graph for everything. Ditto, we have customers who try to do RAG with Zep, and it's not designed for that when you need to recall the original chunks of your data. Zep can do that, but it's not really designed for that. It's probably better for you to use any number of RAG databases if your data is not dynamic, i.e. it never changes. Then you might as well just vectorize it and put it into a vector database. So we spend a lot of time educating customers.

When open source goes viral

Kenn So: It's super interesting. There are two topics I want to move onto. One is, it seems like Graphiti is on a rocket ship. What's happening there? And how are you keeping up with it?

Daniel Chalef: Oh, man. We're not keeping up with it. There are way more issues than we can handle. It's been challenging.

I think it's just captured imagination. With an open source project, you sometimes get into a strong virtuous cycle. Somebody might build something cool. Other people think it's really cool, it goes viral on X or wherever, and lots of people star the repo. Then other people write about it, you might get onto Hacker News or something, and you get even more stars.

So you become a project of the day, and more people find out about it because GitHub emails everybody saying this is the project of the day. Then you might stay up on project of the day over an entire weekend, other influencers might hear about it and build things with it, and you just get into a positive cycle around a project. And then it gets to a certain size where it's got some gravity to it, and it just keeps rolling.

Kenn So: It does. Now it has 11K stars. Has that translated to enterprise business pipeline?

Daniel Chalef: It has. We have some very large companies who reached out to us struggling with the temporal nature of memory, and thought that a graph database would be better, and then came across Graphiti, and then came across Zep, and now we're talking about pilots with them.

Kenn So: That's exciting.

Daniel Chalef: Yeah.

What's coming next

Kenn So: You're going to be very busy. So what's next for Zep? What can you share about what we should be looking out for?

Daniel Chalef: There's a lot of work to do when it comes to enterprise adoption. So we're putting a lot of effort into that. We're SOC 2 Type 2 compliant. We have a BYOC offering. And so there's a lot of engineering and infrastructure work that we're spending time on.

That said, we recently rolled out the ability to customize Zep for your domain. And so there's a lot of work going into that. Just this past week, we actually rolled out the ability to build custom edge types as well.

Kenn So: So you can build custom relationships between nodes now. Interesting.

Daniel Chalef: Yeah. And that's experimental. We're constantly working on latency. Recall latency is down to 200 milliseconds for our hot path, the memory.get API. So we're working with voice agent platforms at the moment on that.

Wearing multiple hats as a founder

Kenn So: We’re almost out of time. Any call to action for the readers?

Daniel Chalef: I would just say: check out Graphiti. A lot of our marketing focus has gone into Graphiti, and Zep kind of comes in as a byproduct. I don't know if you saw my talk last week.

Kenn So: At the AI Engineering conference? I haven’t. I saw you were presenting but I didn't get to attend your talk.

Daniel Chalef: You missed it — the talk was apparently voted best of the GraphRAG track. I have a trophy that I have to go fetch.

Kenn So: Ha, nice. Actually, one last thing I really want to ask: you've worn many different hats, including head of marketing, corporate development, founder, and engineer. How has that shaped how you think about building Zep?

Daniel Chalef: It's given me pretty deep insight into how to market Zep. I've been close to the go-to-market function, so I also understand how to sell Zep and what enterprises need. I've got a lot of enterprise DNA from working in enterprise software companies. Coupling that with an ML and engineering background, understanding both how it works and what our customers might need and what resonates with them, has been very, very effective.

Kenn So: Lots to unpack there and we could go for another 30 minutes. But we can end it here. I really enjoyed the chat. Thanks for taking the time, Daniel.