Briefings highlight generational AI scaleups that dominate their category and startups that are emerging category creators. This briefing is special not only because it is the first Generational company briefing, but also because I had a chance to catch up with the project's creator and co-founder, Jerry Liu.

LlamaIndex (previously known as GPT Index) provides a central interface to connect your LLMs with external data. LLMs are pre-trained on large amounts of publicly available data, but to be practical for companies they have to be augmented with private data. To perform that augmentation in a performant, efficient, and cheap manner, companies have to solve two problems: data ingestion and data indexing. That’s where LlamaIndex comes in. It offers:

Data connectors to your existing data sources and data formats (APIs, PDFs, docs, SQL, etc.).

Indices over your unstructured and structured data for use with LLMs. These indices help abstract away common boilerplate and pain points for in-context learning.

An interface to query the index (feed in an input prompt) and obtain a knowledge-augmented output.

A comprehensive toolset for trading off cost and performance.

Why LlamaIndex is a generational company

Solving a real pain point in a fast-growing market

One weakness of foundation models is that they hallucinate and need to be grounded in actual data. LlamaIndex provides a way to integrate, index, and query external data sources.

Entrepreneurial and technical founders

Jerry Liu (CEO, creator of the project) graduated from Princeton, where he was co-president of the Entrepreneurship Club, published research at top AI conferences, and worked at venture-backed startups (Uber, Robust Intelligence).

Simon Suo (co-founder) graduated from the University of Toronto and the University of Waterloo, published research at top AI conferences, and worked at venture-backed startups (Uber, Waabi).

One of the fastest-growing open-source projects

The project had fewer than 700 GitHub stars at the beginning of January 2023. It grew to 12.5K by the second week of April.

Briefing

As foundation models gained popularity in the industry in 2022, Jerry Liu was keen to get hands-on experience with generative large language models. He wanted to build a sales bot but had trouble feeding data to GPT-3. “I fed information to GPT-3 but it had limitations on context window. I don’t want to do a fine tuning of the model. I wanted to solve this pain point. How do I store the data separately, learn how to ingest data, and do retrieval.” According to Jerry, creator of the project and co-founder of the company productizing it, that experience is what led to the creation of LlamaIndex.

Jerry's interest in generative AI can be traced back several years to his time as an undergraduate at Princeton University, where he first explored generative adversarial networks (GANs) during the initial wave of generative AI in the late 2010s. GANs, which differ in architecture from today's foundation models, are primarily used for visual tasks like image synthesis, data augmentation, and style transfer. This early interest led him to AI research at Uber and a year-long stint as a machine learning engineer at Quora. His research on sensor compression and motion planning was published at top machine learning conferences, including NeurIPS and CVPR.

After 2.5 years at Uber, Jerry decided to shift from AI research to its application in industry. In February 2021, he joined Robust Intelligence, a Sequoia-backed startup that manages AI risk by monitoring and testing machine learning models. There, he led engineering for an AI firewall product designed to vet data before it enters a model.

On November 8, 2022, Jerry launched LlamaIndex via a tweet. The project started as a simple tree index for organizing information and grew slowly at first because, as Jerry found, “the tree index did not work well in practice.” He kept building, however, and evolved the project into a versatile LLM toolkit that supports multiple data modalities and index types. By January 2023, LlamaIndex had reached an inflection point and began to see accelerated growth.

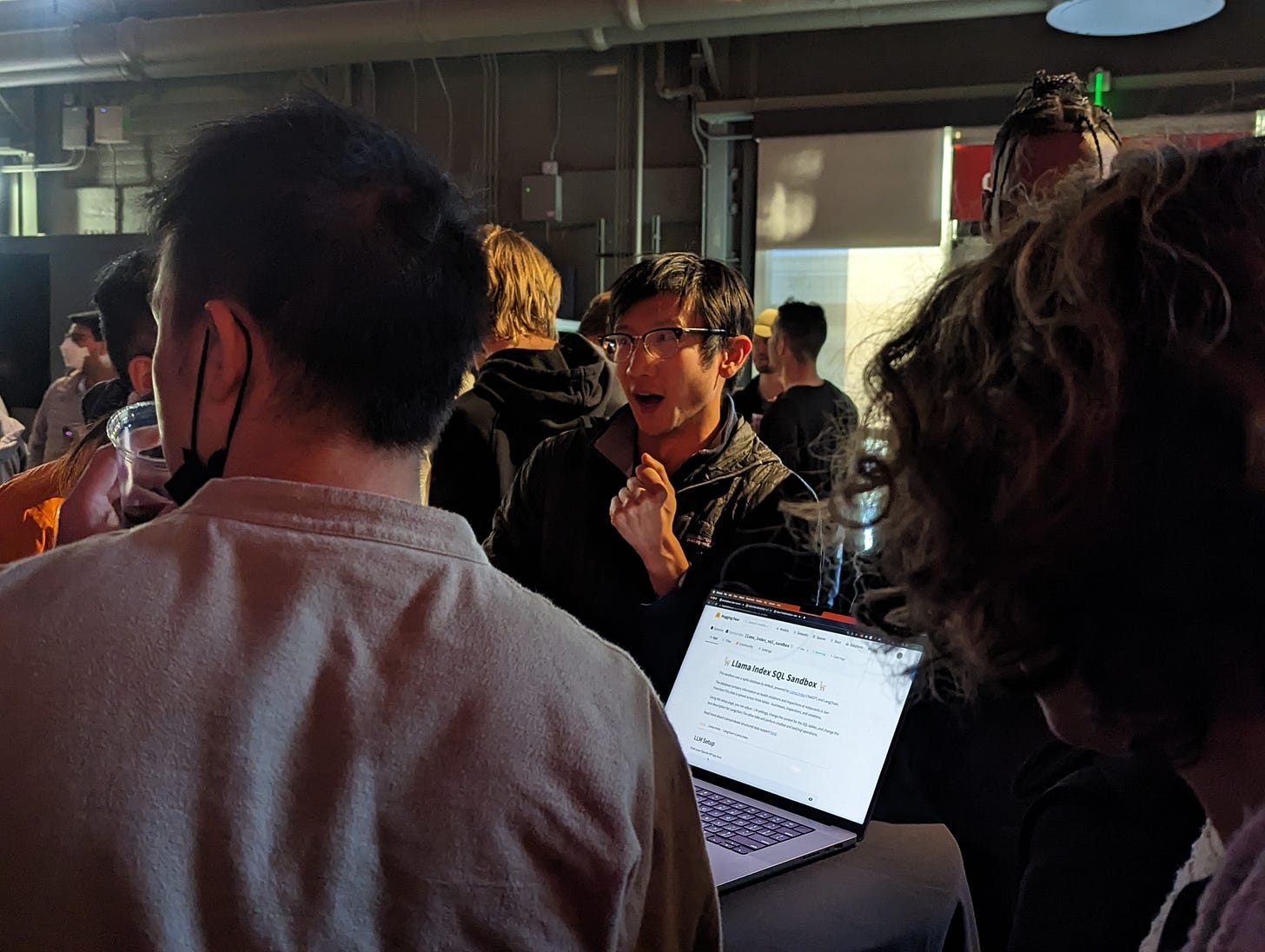

When I caught up with Jerry in March, he was just three weeks into building the project full time. I’m always intrigued to hear why founders decide to start a company, especially when the decision is still fresh in their minds. “Seeing the community adoption by March, I knew I had something going on. And I’ve always wanted to build a company. It goes back to my Entrepreneurship Club days at Princeton.” He was co-president of Princeton’s Entrepreneurship Club and led HackPrinceton, one of the largest hackathons, with students from dozens of other schools participating. In hindsight, his path from big tech to startup employee to founder seems natural.

The market has seen a surge of new open-source projects built around foundation models, with new state-of-the-art alternatives emerging every couple of weeks. Notable among these is the Llama family of models: Meta's GPT-3 alternative Llama, Stanford's ChatGPT alternative Alpaca, and the cross-university ChatGPT alternative Vicuna. Most of these projects, however, are alternatives to OpenAI's models, and building LLM-powered software requires more than a base model trained on public data. Fetching and transforming private data makes LLMs much more useful to companies. That is where LlamaIndex comes in. Jerry envisions it as “the data management interface for your LLM. The tool to interface with any database, workflows, ETL jobs.” The project is currently focused on textual data, but Jerry has outlined a near-term roadmap for LlamaIndex:

Expanding the libraries to robustly support other unstructured data, like images, and structured data, like SQL.

Improving the ease of use and modularity of indexes to enable more complex indexing.

Optimizing pre-processing of data (e.g., text chunking) and querying (e.g., minimizing token usage).

Over the past few weeks, I have been experimenting with LlamaIndex myself (see my personal example below) and participating in the community as a Discord member. Throughout this time, I have come to appreciate more and more the practicality and versatility it brings to the table. Developers who want to build LLM apps and contribute to open source should check out LlamaIndex.

Links

GitHub page | Documentation | Discord | LlamaIndex Twitter | Jerry’s Twitter

Product notes: Pain point and solution

Indices for unstructured and structured data: Handling large amounts of unstructured and structured data can be challenging when working with LLMs due to context and prompt limitations. Finding the relevant text in arbitrary chunks can be difficult and inefficient.

Today's status quo for analyzing documents is to chunk the text into pieces, convert each piece into an embedding vector, store those vectors in a database, embed the user query to find the closest text pieces, and then feed those pieces to the LLM as context. This works well for short documents and simple questions, but it struggles with knowledge-heavy queries over long documents, like books, that go beyond the LLM's context window. Non-fiction books generally follow a logical structure, organized into sections, chapters, and paragraphs, and readers need to go through the text linearly to get a clear understanding. Naive chunking ignores this structure, so developers have to create indices that fit their usage patterns.
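The status-quo pipeline described above (chunk, embed, store, retrieve) can be sketched in a few dozen lines of plain Python. This is an illustration of the general technique, not LlamaIndex code: the bag-of-words `embed` function is a toy stand-in for a real embedding model, `InMemoryVectorStore` is a hypothetical stand-in for a vector database, and chunk sizes are counted in words rather than model tokens.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of `chunk_size` words that share `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reaches the end of the text
    return chunks


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database: a list of (vector, chunk) rows."""

    def __init__(self) -> None:
        self.rows: list[tuple[Counter, str]] = []

    def add(self, chunk: str) -> None:
        self.rows.append((embed(chunk), chunk))

    def top_k(self, query: str, k: int = 2) -> list[str]:
        """Embed the query and return the k most similar stored chunks."""
        q = embed(query)
        ranked = sorted(self.rows, key=lambda row: cosine(q, row[0]), reverse=True)
        return [chunk for _, chunk in ranked[:k]]


# Usage: index a tiny document, then fetch context for a question.
store = InMemoryVectorStore()
doc = "Venture returns follow a power law. Most funds lose money. Sourdough needs a starter."
for piece in chunk_text(doc, chunk_size=6, overlap=1):
    store.add(piece)
context = store.top_k("Why do venture returns follow a power law?", k=1)
```

Note that `chunk_text` cuts at fixed word counts with no regard for sentence, paragraph, or chapter boundaries (the second chunk here straddles two unrelated sentences), which is exactly the structure-blindness that makes this approach struggle on long, organized documents like books.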

Here’s my experience building with LlamaIndex. A friend suggested I check out the book The Power Law by Sebastian Mallaby. It caught my interest, but I didn't want to spend the time reading it cover to cover, so I bought a digital copy and fed it into LlamaIndex. The project supports multiple index structures (list, vector, tree, graph, etc.) that can be stacked on top of each other, so it can handle many Q&A and conversational scenarios. To support chapter-specific questions and summaries, I created a list + vector index for each chapter, which lets an LLM agent route each question to the relevant chapter's index. The retrieved text is then fed to the LLM sequentially, so the final answer stays coherent. In contrast, basic chunking and summarization may appear to retrieve text intelligently but can end up pulling text from random parts of the book.
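The per-chapter setup described above boils down to two steps: route the question to one chapter's index, then walk that chapter's retrieved text through the LLM one piece at a time, refining a running answer. The sketch below illustrates that pattern only; it is not LlamaIndex's API. `ChapterIndex`, `route`, and `refine_answer` are hypothetical names, simple keyword overlap with a chapter summary stands in for the LLM agent that does the routing, and `llm` is any prompt-to-text function you supply.

```python
from dataclasses import dataclass, field
from typing import Callable

LLM = Callable[[str], str]  # hypothetical: any function that maps a prompt to a completion


@dataclass
class ChapterIndex:
    title: str
    summary: str  # stands in for the per-chapter list-index summary
    chunks: list[str] = field(default_factory=list)  # stands in for retrieved vector-index text


def _words(text: str) -> set[str]:
    return {w.strip(".,?!").lower() for w in text.split()}


def route(question: str, chapters: list[ChapterIndex]) -> ChapterIndex:
    """Pick the chapter whose summary shares the most words with the question.

    Keyword overlap is a crude stand-in for an LLM-based router.
    """
    q = _words(question)
    return max(chapters, key=lambda ch: len(q & _words(ch.summary)))


def refine_answer(question: str, chunks: list[str], llm: LLM) -> str:
    """Feed chunks to the LLM one at a time, refining a running answer."""
    answer = ""
    for chunk in chunks:
        if not answer:
            prompt = f"Context:\n{chunk}\n\nAnswer the question: {question}"
        else:
            prompt = (
                f"Existing answer: {answer}\n"
                f"New context:\n{chunk}\n\n"
                f"Refine the existing answer to the question: {question}"
            )
        answer = llm(prompt)
    return answer


def answer_question(question: str, chapters: list[ChapterIndex], llm: LLM) -> tuple[str, str]:
    """Route to one chapter, then answer from that chapter's text only."""
    chapter = route(question, chapters)
    return chapter.title, refine_answer(question, chapter.chunks, llm)
```

Refining one chunk at a time keeps every prompt inside the context window and preserves the chapter's reading order, at the cost of one LLM call per retrieved chunk.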

Integration with external data sources: Integrating LLMs with different data types can be challenging because it requires users to preprocess and convert data into a format that LLM systems can understand.

For instance, let's say a user wants to create an AI chatbot that answers questions based on their company's internal knowledge base, which includes data in various formats. LlamaIndex simplifies this process with data connectors that handle the integration, allowing users to focus on leveraging the LLM's capabilities for their chatbot instead of dealing with data ingestion issues.
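A data connector's job is to turn heterogeneous sources into one uniform representation that the rest of the pipeline can index. The sketch below illustrates that idea with the standard library only. It does not reproduce LlamaIndex's actual connectors; the `Document` dataclass, the `load_*` functions, and the `LOADERS` registry are hypothetical, and formats like PDFs and APIs are left out because they need third-party libraries.

```python
import csv
import io
import json
from dataclasses import dataclass, field


@dataclass
class Document:
    """Hypothetical uniform record: text for the LLM plus metadata for filtering/citing."""
    text: str
    metadata: dict = field(default_factory=dict)


def load_plain_text(raw: str, source: str) -> list[Document]:
    return [Document(raw.strip(), {"source": source, "format": "text"})]


def load_csv(raw: str, source: str) -> list[Document]:
    """One Document per row, rendered as 'column: value' lines."""
    reader = csv.DictReader(io.StringIO(raw))
    docs = []
    for i, row in enumerate(reader):
        text = "\n".join(f"{k}: {v}" for k, v in row.items())
        docs.append(Document(text, {"source": source, "format": "csv", "row": i}))
    return docs


def load_json(raw: str, source: str) -> list[Document]:
    """One Document per record in a JSON array (or a single top-level object)."""
    data = json.loads(raw)
    records = data if isinstance(data, list) else [data]
    return [
        Document(json.dumps(rec, sort_keys=True), {"source": source, "format": "json", "record": i})
        for i, rec in enumerate(records)
    ]


LOADERS = {".txt": load_plain_text, ".csv": load_csv, ".json": load_json}


def load(source: str, raw: str) -> list[Document]:
    """Dispatch on file extension; unknown formats raise instead of failing silently."""
    for ext, loader in LOADERS.items():
        if source.endswith(ext):
            return loader(raw, source)
    raise ValueError(f"no connector registered for {source!r}")
```

Because every loader returns the same `Document` shape, the chunking, indexing, and querying code downstream never needs to know whether a piece of knowledge came from a spreadsheet or a JSON export.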

User-friendly querying interface: Querying LLMs with external data can be cumbersome, as it often requires manual crafting of input prompts and managing the output.

Take, for example, a user who wants to use an LLM to generate summaries of news articles based on their organization's collection of news sources. Without LlamaIndex, the user would need to manually craft a context-aware prompt and ensure the generated summary is accurate. With LlamaIndex's querying interface, users can simply input their request, and the system automatically creates the context-aware prompt, manages the LLM interaction, and returns a knowledge-augmented output.
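Creating a "context-aware prompt" largely amounts to packing the most relevant retrieved text into a template without overflowing the model's context window. A minimal sketch, assuming a hypothetical `PROMPT_TEMPLATE` (not LlamaIndex's built-in prompt), snippets already sorted by relevance, and a crude word count in place of a real tokenizer; real models count subword tokens, so a production version would use the model's own tokenizer.

```python
PROMPT_TEMPLATE = (  # hypothetical template, not LlamaIndex's built-in prompt
    "Use only the context below to answer.\n"
    "---------------------\n"
    "{context}\n"
    "---------------------\n"
    "Request: {request}\n"
)


def approx_tokens(text: str) -> int:
    """Rough token estimate: one token per whitespace-separated word (an assumption)."""
    return len(text.split())


def build_prompt(request: str, snippets: list[str], max_tokens: int) -> str:
    """Pack snippets into the template until the token budget is used up.

    `snippets` is assumed to be sorted by relevance, most relevant first; packing
    stops at the first snippet that would exceed the remaining budget.
    """
    base = PROMPT_TEMPLATE.format(context="", request=request)
    budget = max_tokens - approx_tokens(base)
    chosen = []
    for snippet in snippets:
        cost = approx_tokens(snippet)
        if cost > budget:
            break
        chosen.append(snippet)
        budget -= cost
    return PROMPT_TEMPLATE.format(context="\n\n".join(chosen), request=request)
```

Stopping at the first snippet that does not fit keeps the context strictly in relevance order; skipping it and trying smaller, lower-ranked snippets instead would use the budget more fully at the risk of adding weaker context.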

Balancing cost and performance: Finding the right balance between LLM performance and the cost of deployment can be difficult, especially when choosing between advanced models like GPT-4, which are powerful but expensive and slow, and faster, less capable models like GPT-3.5.

Consider a user who wants to build a content generation tool that creates both simple and complex articles on various topics. LlamaIndex provides tools to help users optimize their LLM usage, allowing them to balance performance and cost by selecting the appropriate model for each task. Users can configure their LLM deployment to use the faster GPT-3.5 for simpler content generation and the more powerful GPT-4 for tasks that require advanced understanding and synthesis, ensuring they get the best value for their investment.
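The routing idea behind this trade-off can be sketched independently of any library: give each model a capability tier and a cost, then send each task to the cheapest model that is capable enough. Everything here is an assumption for illustration: the `ModelOption` fields, the 1-to-5 capability scale, and the cost numbers are hypothetical placeholders (not real prices), and the caller decides how much capability a task needs. This is not how LlamaIndex itself configures models.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    capability: int            # hypothetical quality tier, 1 (basic) to 5 (strongest)
    cost_per_1k_tokens: float  # any unit you like; take real numbers from your provider


def pick_model(options: list[ModelOption], required_capability: int) -> ModelOption:
    """Return the cheapest model whose capability meets the task's requirement."""
    eligible = [m for m in options if m.capability >= required_capability]
    if not eligible:
        raise ValueError(f"no model reaches capability {required_capability}")
    return min(eligible, key=lambda m: m.cost_per_1k_tokens)


def estimate_cost(model: ModelOption, tokens: int) -> float:
    """Linear cost estimate for a request of `tokens` total tokens."""
    return model.cost_per_1k_tokens * tokens / 1000


# Placeholder tiers and relative costs for illustration only; these are not real prices.
MODELS = [
    ModelOption("gpt-3.5-turbo", capability=2, cost_per_1k_tokens=1.0),
    ModelOption("gpt-4", capability=4, cost_per_1k_tokens=20.0),
]

simple_task_model = pick_model(MODELS, required_capability=1)   # gpt-3.5-turbo
complex_task_model = pick_model(MODELS, required_capability=4)  # gpt-4
```

Keeping the capability threshold as an explicit input makes the trade-off visible: raising a task's requirement is a deliberate decision to pay for the stronger model, rather than a default applied to every request.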

Generational is hosting a small-group dinner in San Francisco on April 26 at 7 PM. It will be a mix of people building and investing at the forefront of AI, and one seat is reserved for a reader (you). Interested in joining this month’s or future dinners? Reach out on LinkedIn or leave a comment.

Special thanks to Specter for supporting Generational’s startup series. When I was a venture investor, I tried different systems, and even attempted to build my own, to help me find the best founders and companies to partner with. I’d say Specter is the best. Check them out.
