In this series, I interview people at the forefront of building AI products who I think have something special. Through these interviews, I hope to share the hard-won lessons these folks have learned from large-scale deployments. If you enjoyed this interview, who should I interview next?

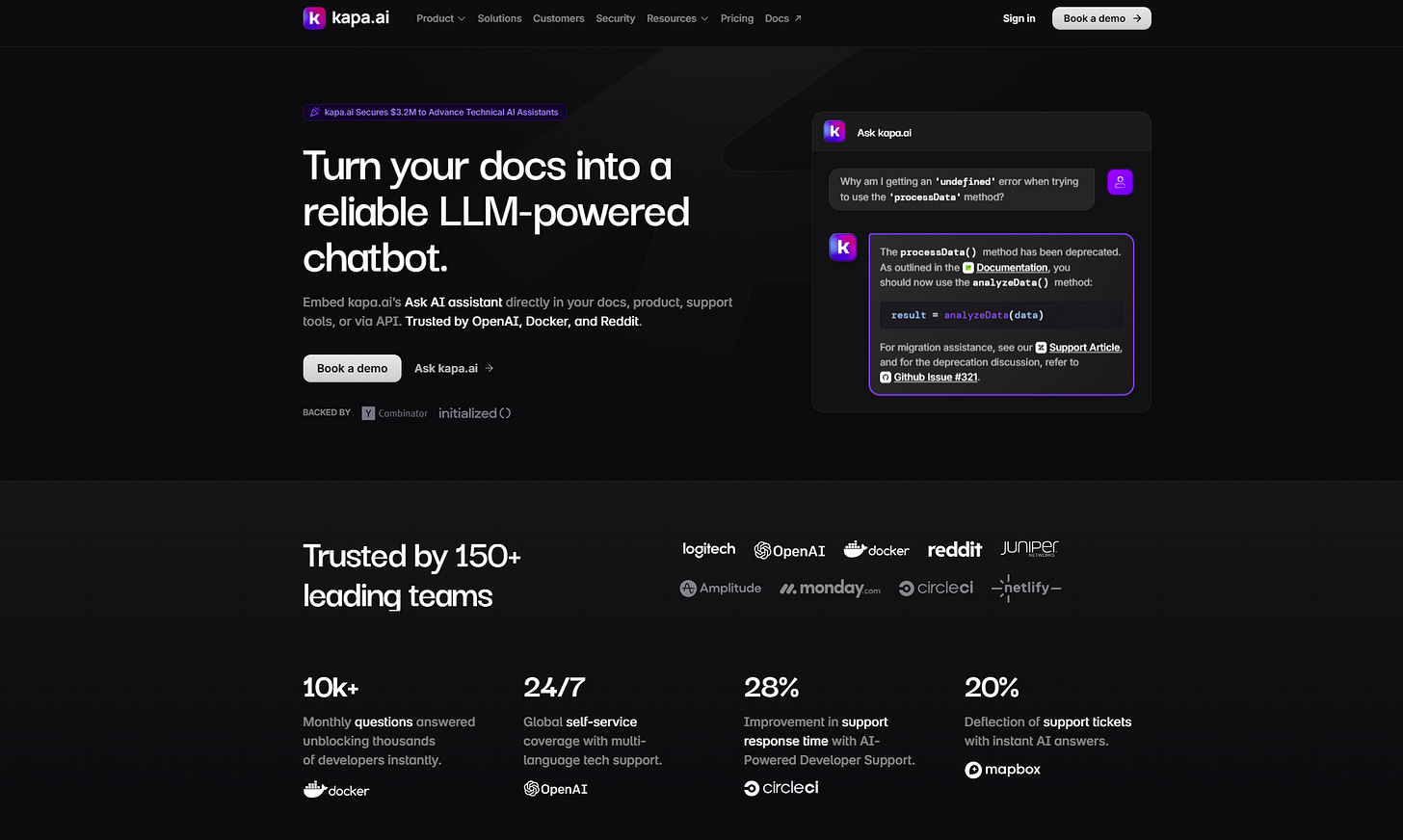

In this interview, I speak with Kapa.ai's CEO and co-founder, Emil Sorensen. Kapa helps companies answer technical product questions from their customers and developers, instantly and accurately. I first met Emil when they were presenting their hack in early 2023, not long after ChatGPT came out. That hack evolved into the company that now serves 150+ customers, including companies with world-class engineers like OpenAI.

Key learnings

Focus Beats Breadth: Emil emphasized the power of staying laser-focused on technical documentation rather than expanding into general-purpose chatbots. This narrow focus allowed Kapa to build deep expertise and better serve their specific audience, competing effectively against broader platforms like Intercom and Zendesk.

Build Your Own Evaluation Framework: One of Kapa's biggest advantages is their comprehensive evaluation suite developed over two years. This "secret sauce" allows them to quickly test new models and features against their specific use case, staying grounded amid AI hype cycles.

The Last 30% is the Hardest: While talented in-house engineers can build a RAG system to 70% quality, the remaining 30% needed to reach production quality involves security, rate limiting, front-end interfaces, and operational excellence, all of which require focus and time.

Quality of Data Sources Determines Everything: The biggest determinant of answer quality isn't the underlying model but the quality of documentation and data sources. Kapa turns away customers whose documentation isn't ready.

"I Don't Know" is a Feature, Not a Bug: Users highly value when AI systems admit uncertainty rather than hallucinating answers. This builds trust and makes the system more reliable for technical use cases.

Small Teams Can Build Big Things: With just 15 people, Kapa serves 150+ companies and has answered 5+ million questions. The key is finding talented people who thrive in high-impact, chaotic startup environments.

There's so much more to learn and unpack from my chat with Emil. Read on below to see what's next.

Kenn So: Thanks for taking the time, Emil. This is two years in the making, so I'm excited we are here today. For the audience, let's start with the usual - what is Kapa? What's the origin story? How did you come to start Kapa, and what's the journey been like so far?

Emil Sorensen: At a high level, Kapa is a platform that makes it really easy for technical companies to build RAG assistants and deploy them where their users or employees have questions. What this looks like in a lot of cases - take someone like Docker that uses Kapa - is an AI system that lives on their documentation. For other folks like OpenAI, it might mean they have an active Discord community, so a version of Kapa lives in there to help their end users navigate the thousands of pages of content that the OpenAI team produces.

Now, two years in, Kapa is used by more than 150 companies, including lots of cool companies like Reddit, Docker, and OpenAI, and has answered more than 5 million technical questions.

Kenn So: Super exciting to hear 150+. And you've got some of the most technically minded companies who are at the AI frontier using Kapa. Was that how you envisioned it right from the very start, or how did it evolve over time?

Emil Sorensen: One thing I don't believe in a ton is this concept of a founder waking up one morning and seeing the light and coming up with a mission. I'm much more in the school that it's exciting to work on stuff that's used by people.

It's a good bridge to maybe the founding story, which is actually pretty straightforward. Finn and I are very close friends. We both met in London for our first Master's degree, thinking we would go study finance at LSE, which we did. Fortunately, just before that I'd spent a summer in San Francisco interning at a startup. Still no idea how I got the gig, but I was working as a self-taught engineer writing some terrible code.

I came back and met Finn in the line for picking up our student cards. I was telling Finn about this startup experience, and he was like, "Oh wow, that sounds awesome!" Our friendship started there. We got excited about all this stuff and looked at each other a couple of weeks into this program going, "What are we doing trying to be bankers in London? This is a horrible idea." But we saw the degree through and in parallel convinced ourselves to go study computer science instead when we were done.

We went to do a conversion master's in computer science, which apparently in London you can just study for a year.

We didn't have any ideas when we were done with our degrees in 2019, but around that time we'd been tinkering with embedding models and fine-tuning early GPT versions to try to write our abstracts. None of it worked. But in our intro to deep learning class, they used OpenAI's reinforcement learning work - I think it was Dota - to show how you could train models. We were poking around this stuff, and they were teaching us how to write basic neural nets from scratch. The attention paper was coming out around this time, but there wasn't much to it.

Kenn So: So early though, 2019. GPT-3 might still have been training then, and only a few people knew about it. How did you get into those early explorations?

Emil Sorensen: It was just around this time that the attention paper was coming out. There was a little bit of chatter around this, but the only motivation we had for tinkering with this was maybe it could write our abstract, which it couldn't. It was horrible. And then we moved past it.

Kenn So: How'd you go from that to McKinsey?

Emil Sorensen: Very personal reasons. At the time I was living in London, but my girlfriend, now wife and soon to be mother of our first child, lived in Copenhagen. If you're kind of ambitious in Copenhagen, there isn't a big scene of cool tech companies to go work for - you go work for McKinsey. I would have started a startup then, but Finn and I didn't have any good ideas. So he went to work as an engineer for a couple of years while I was at McKinsey.

Kenn So: What then led you to go from McKinsey to deciding to start Kapa?

Emil Sorensen: I was at McKinsey for three years before we quit, and for a while Finn and I really tried, on weekends and early mornings, to figure out what startup we wanted to build. We wanted to build something together, but it was like, "What are we going to build? We should do some customer interviews." But when you're working 80-hour weeks flying everywhere as a consultant, you just don't have time for this.

We met over summer in Austria to go mountain biking for a week together, and around then Finn started flirting with the idea that maybe we should just quit and give ourselves a bit of time. We left that vacation going, "Maybe quitting first of December doesn't sound too bad." We said, "You know what, it's now or never. We're in our late twenties. If we don't do this now, it's going to be way harder." And then we just quit.

We showed up and started brainstorming in mid-January. For timeline context, by early December ChatGPT had launched, and the Ada embeddings came out around December 13th or 14th. Around this time we were reading Hacker News, and people were beginning to say you could finally build these systems. They didn't call them RAG then, but they were systems where you pull data back from a database. Super lucky with the timing, to be honest.

The day after we bought a whiteboard and started brainstorming without any ideas, we got really lucky: we had two friends who had each co-founded dev tools companies. One was a guy we met while studying computer science in London, who had a YC-backed computer vision company. The other was Nick, here in Copenhagen, who I worked with at McKinsey and who co-founded Medusa.js, a big headless commerce platform with tens of thousands of GitHub stars.

Both of them called us on the same Tuesday saying, "Hey guys, we know you quit, you're looking for ideas." Both were complaining about the same thing: their engineers were spending so much time on Discord and Slack communities answering technical questions, and maybe that was solvable now with this new ChatGPT stuff. "Could you guys try ramming our documentation and some GitHub issues into some sort of assistant?"

We spent some time building a prototype for them. That's the origin story.

Kenn So: Such an amazing founding story. So Kapa started there, then you went through YC two years ago. There's so much to unpack in between then and now. Maybe let's start with the product. How has the product evolved?

Emil Sorensen: The interesting thing is at its core, not much has changed. We still try to do one thing really well, which is: you give us all your technical content - all your developer documentation, all your API references, all your GitHub issues. And we'll do the best job possible of letting you ask questions about that and generating an answer.

Whether that's using RAG, whether that's using some reasoning-based approach, whether that's using a Claude model or an OpenAI model, or open source or fine-tuned model - we don't really care. All we care about is whatever does the best in our evaluations, and then we'll ship that. We initially struggled with what to call this, but we've settled on "answer engine" as a concept. Question comes in, answer comes out. Our users don't really care what we do under the hood.

That's our North Star. Of course there's been a lot that's happened since then from a product perspective - integrations, platform features, all this stuff - but really at its core, our job is just to give really accurate answers based on the content you give us.

Kenn So: Having interacted with Kapa since the early days of the industry, it's fun when I still encounter it in new AI projects I'm working on. It's still the same experience on Discord: it still gives you accurate answers, and it still says "I don't know" if it really doesn't know. But I'm sure a lot has changed under the hood over the past two years. What were the major developments that made your product harder to build, or maybe easier?

Emil Sorensen: Let me break it down into interesting data sources, interesting integrations, and then interesting approaches to the actual models themselves.

From a model perspective, without a doubt, the models themselves getting better is definitely a big improvement for Kapa. Initially, I heard "GPT wrapper" so many times, but that meme is finally starting to decline as people see this is just another piece of infrastructure you need to build on top of and tame, much like you do with a Postgres database.

The tricky part to navigate is, if you're very deep on Hacker News or Twitter, you will freak out as a founder in this space if you don't have anything to evaluate these things against. For us the biggest lift was very early on making a bet that the secret sauce for a company like Kapa is our approach to evaluations.

That meant building trust in our own eval set, so that whenever people are panicking about DeepSeek, or Anthropic releases a new citations API, we can very quickly test it against our use case and see whether it actually helps. One thing we really care about is a model's ability to say "I don't know."
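Kapa's eval suite isn't public, so what follows is only a hypothetical sketch of the idea Emil describes: run every candidate model against one fixed set of questions and track two numbers, answer accuracy on questions the docs can answer and correct "I don't know" abstentions on questions they can't. The `EvalCase` structure, the keyword check, and the literal "I don't know" marker are all assumptions made for illustration. A real suite would more likely use human-labeled answers or a grader model.

```python
from dataclasses import dataclass, field
from typing import Callable

# Assumed abstention marker; a real suite would likely use a grader instead.
IDK_PHRASE = "i don't know"


@dataclass
class EvalCase:
    question: str
    # Keywords a correct answer must mention. Empty means the docs cannot
    # answer this question, so the model should abstain.
    must_contain: list[str] = field(default_factory=list)


def is_abstention(answer: str) -> bool:
    return IDK_PHRASE in answer.lower()


def score(model: Callable[[str], str], cases: list[EvalCase]) -> dict[str, float]:
    """Return accuracy on answerable cases and abstention rate on unanswerable ones."""
    answered_ok = answerable = abstained_ok = unanswerable = 0
    for case in cases:
        answer = model(case.question)
        if case.must_contain:
            answerable += 1
            text = answer.lower()
            # An abstention never counts as a correct answer.
            if not is_abstention(answer) and all(k.lower() in text for k in case.must_contain):
                answered_ok += 1
        else:
            unanswerable += 1
            if is_abstention(answer):
                abstained_ok += 1
    return {
        "accuracy": answered_ok / answerable if answerable else 0.0,
        "abstention": abstained_ok / unanswerable if unanswerable else 0.0,
    }
```

Scoring the two numbers separately matters: a model that confidently guesses on everything can match a careful model on accuracy while scoring zero on abstention, which is exactly the failure mode a single blended score would hide.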

Kenn So: That's really interesting. Are there specific instances where evals kept you grounded? Were there instances where you saw the hype was real versus ones that were duds?

Emil Sorensen: The Anthropic team really figured something out with Sonnet 3.5; that model was very good. To lift the lid on what's very top of mind for us now, GPT-4.1 is a phenomenal model, but it takes a lot of taming to get it right. For grounded RAG use cases, it's great.

Some very hyped things have been open source models. And it's a bummer, because as an engineer you want to see open source catch up. But they've constantly lagged behind, at least on our evals, by a year to a year and a half in terms of performance.

Kenn So: Yeah, evals really help you keep grounded amidst all the hype. If you don't have a particular use case, it's really hard to know what you're going to ground yourself in as a general bot.

Emil Sorensen: Exactly right. Today, when someone like a larger technical enterprise evaluates Kapa, usually the main competition we're up against is a crack team of really good engineers internally that might go, "Hey, it makes sense for us to build some capabilities in-house around this."

We've been able to crowdsource our evaluation suite over close to two years to understand how a model like Kapa is supposed to behave.

It's one of these things where it's very easy to build a prototype that gets you 70% of the way there. It's very hard to get that last 30% to get it to a point where you feel comfortable putting it into production.

Kenn So: That's a point I want to unpack later - you're up against crack engineers who could probably put a RAG system on top of their data sources. But let's go back to earlier topics. You mentioned data sources and integrations. Maybe we could go there next.

Emil Sorensen: If you think about Kapa, it's data sources in, question comes in, answer comes out. One thing you can play with is the model itself: how a question comes in, retrieval, and generation. The other thing you can work on is the data sources.

A good example here is something like a PDF. We work with a bunch of semiconductor companies and all their documentation lives in data sheets and PDFs. Horrendous data format. We've tried now three or four iterations over the last two years of building a PDF converter that we were satisfied enough with the quality to fold into our product.

We've come back to this every half a year, and it's only now, in May 2025, that combining OCR and VLMs has finally gotten good enough that we're like, "Okay, this thing we've been working on for two or three months to ingest PDFs is finally good enough to make its way into Kapa's integrations."

The reason I say that is that we care about very specific things. Unlike a general system, we care about the ability to cite and the ability to preserve hierarchy within a big document. Some of these data sheets are hundreds of pages long. Once you have customers you're working with, it's easy to go find examples, build a specific eval suite, and work against that to build your very niche integration.

It's the same story time and time again - how do you think about GitHub? How do you think about Zendesk support tickets? How do you think about all these other technical sources? The nice thing is that we can afford to do that because we're so focused on working only with technical companies. If we positioned ourselves as a general-purpose chatbot that's also supposed to do support for e-commerce companies, there's no way we could invest this amount of time into parsing and structuring 100+ page technical PDFs.
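To make "preserve hierarchy" concrete, here is a minimal sketch of hierarchy-aware chunking. It is not Kapa's pipeline. It assumes the PDF has already been converted (for example by an OCR plus VLM step, not shown) into markdown-style text with `#` headings, and it keeps each chunk's full heading path so an answer can cite "Electrical Characteristics > Absolute Maximum Ratings" rather than an anonymous page fragment. `Chunk`, `citation`, and `chunk_by_headings` are hypothetical names.

```python
import re
from dataclasses import dataclass

# Markdown ATX heading: 1-6 '#' characters, whitespace, then the title.
HEADING = re.compile(r"^(#{1,6})\s+(.*)$")


@dataclass
class Chunk:
    heading_path: list[str]  # e.g. ["Electrical Characteristics", "Absolute Maximum Ratings"]
    text: str

    def citation(self) -> str:
        return " > ".join(self.heading_path)


def chunk_by_headings(markdown: str) -> list[Chunk]:
    """Split markdown-style text into one chunk per section, keeping each chunk's full heading path."""
    chunks: list[Chunk] = []
    stack: list[tuple[int, str]] = []  # (heading level, title) from the root down
    body: list[str] = []

    def flush() -> None:
        # Emit the text collected under the current heading path, if any.
        text = "\n".join(body).strip()
        if text:
            chunks.append(Chunk([title for _, title in stack], text))
        body.clear()

    for line in markdown.splitlines():
        match = HEADING.match(line.strip())
        if match:
            flush()
            level = len(match.group(1))
            # A new heading closes any open section at the same or a deeper level.
            while stack and stack[-1][0] >= level:
                stack.pop()
            stack.append((level, match.group(2).strip()))
        else:
            body.append(line)
    flush()
    return chunks
```

Using a stack of (level, title) pairs rather than indexing by level means a document that skips levels (a `#` followed directly by `###`) still produces sensible sibling sections.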

Kenn So: That's a good segue to the point about you being up against world-class engineers who can probably get 70-80% of the way with RAG off the bat. What's that remaining 20-30% you're so focused on? What makes it really hard and tricky?

Emil Sorensen: I don't think there's any big secrets other than it's just the engineering slog around it. What we often see is a team of really good engineers can definitely build something with pretty good answer quality. Sure, we can poke at edge cases where Kapa is better, but very quickly, this team will have spent half a year building something like this.

Then they realize, "Oh, at some point you also have to take this to production. How do people want to consume this?" They want to consume it as a chat widget on your website. Well, do you also have a bunch of front-end engineers who are ready to build that? How are you thinking about security? You can't just expose your chatbot so that anyone can inspect the network request, because they can hammer it with a million requests and bankrupt you overnight. You have to think about proxies and rate limiting and reCAPTCHA.

There's all this other stuff that becomes table stakes. To be honest, and I'll try to check my own bias, if all you're trying to do is build a system that can answer questions about your product using your docs, it probably doesn't make sense to have your very expensive engineers, who are able to build large language models, working on that.

Instead, what you should do - and we can talk about a few examples - is do what a company like Docker does. They have some really talented, very capable engineers that are thinking about how to build Docker support for MCP servers. Go do that! That's really good. Or how do you build a Docker Desktop AI assistant that can look at your docker-compose file and take action?

What they do is say, "Well, we still want this exposed as a chat interface, but we don't want to build and maintain something like Kapa. Let's just use Kapa as a tool call in that case." In that approach, the product is just one part of a stack. For most companies, that's probably the path to go.
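Of the production concerns Emil lists above (proxies, rate limiting, reCAPTCHA), rate limiting is the easiest to show in a few lines. The following is a generic token-bucket sketch, not Kapa's implementation: each client key (an IP address or session ID, as an assumption) gets a bucket that allows short bursts up to `capacity` requests and refills at `rate` tokens per second. The class names are hypothetical, and the buckets live in process memory, so a real deployment behind several servers would more likely keep these counters in a shared store at the proxy layer.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilling at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float, clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill for the time elapsed since the last call, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class RateLimiter:
    """One in-memory bucket per client key (e.g. an IP address or session ID)."""

    def __init__(self, capacity: float, rate: float, clock: Callable[[], float] = time.monotonic):
        self.capacity, self.rate, self.clock = capacity, rate, clock
        self.buckets: dict[str, TokenBucket] = {}

    def allow(self, key: str) -> bool:
        bucket = self.buckets.get(key)
        if bucket is None:
            bucket = self.buckets[key] = TokenBucket(self.capacity, self.rate, self.clock)
        return bucket.allow()
```

A request handler would call `limiter.allow(client_ip)` before forwarding a question to the model and return an HTTP 429 when it comes back `False`. The injectable `clock` exists only so the behavior can be tested without sleeping.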

Kenn So: When you work with customers, what does onboarding look like? Is there any sort of fine-tuning or evaluation that you do for each customer? I'm curious because even within technical companies, there's a wide range from hardware to software. Even within software, you have map APIs versus JavaScript front-end types. How do those change how your product is experienced?

Emil Sorensen: Onboarding is really company dependent. We let folks self-onboard if they're very capable and really want to do that. We also lean in a lot, because one thing we've realized is that how you set up an instance of Kapa isn't obvious - even a question like "Do you want to let us slurp up all your GitHub issues?" We've seen a lot of cases now, so we like to share that advice and help folks make sure they get everything configured correctly, because that is the biggest determinant at this point of the quality of answers you'll be getting.

That also answers the second question a bit: we try to make Kapa as general-purpose for technical questions as possible. As for how it performs, this wasn't the case two years ago, but by now the biggest determinant of answer quality is really the quality of the data sources.

Unfortunately, just before this I was talking to a customer who decided not to move forward with Kapa, and they were very honest. They said, "We thought we were at a place now with our documentation where this could be helpful enough to answer questions, but we need to come back in a couple of months. We have some work to do on our documentation. It just doesn't cover what our users want to ask."

We try to build some of this into the product to make these gaps easier to find, but good documentation really is super important.

Kenn So: How do you assess if something's good enough from a documentation perspective, from a data source perspective?

Emil Sorensen: By now, the whole Kapa team can take a look at a set of docs pretty quickly and just kind of sniff it out. But the TLDR is, you don't really know before you try it.

Kenn So: You mentioned trying to help fill that gap for your customers: "Hey, this is probably an area where you need to fill out the docs or some data sources." That's a good segue into the different modules and maybe your vision for what Kapa could be, because you can do so much with the data you have. You should be able to say, "Based on the questions, this is an area of the API docs you need to fill out," or "These are the endpoints with the most pain points." I'm curious - you have so much you could extract from the data. What's next?

Emil Sorensen: That's a great question. The short answer is whatever is important to the company that's using Kapa. For most folks, the first thing they do from an analytics perspective is build a habit of plugging holes in their docs on a weekly or monthly basis.

We try to design the product to lend itself to those workflows so they can go, "Oh yeah, seems like May 2025 is the time I focus on my getting started guide because a lot of folks seem to be struggling with that, or maybe it's time to focus on my React SDK docs because there's really high uncertainty in Kapa's ability to answer questions around this topic" - it says "I don't know" a lot.

For other teams, they take it a bit more at a strategic level, so they'll use it more as a tool to say, "Hey, we're thinking about launching on-prem finally. Do we have any data to support that users are increasingly interested in this or less?" It can also become more of a research tool.

But to be honest, this analytics thing on unstructured data produced by LLMs - that's still a tricky problem to solve. I don't think we've completely cracked the right approach yet.
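The simplest version of the gap-finding workflow Emil describes, spotting topics where the assistant says "I don't know" a lot, can be sketched as a per-topic uncertainty rate. This is a hypothetical illustration, not Kapa's analytics: it assumes each logged answer already carries a topic label (assigning topics to free-form questions is the genuinely hard, unsolved part Emil mentions, and is not shown) and a flag for whether the assistant abstained. The `min_questions` threshold is an arbitrary assumption to keep rarely asked topics from dominating the ranking.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class LoggedAnswer:
    topic: str       # assumed to be assigned upstream, e.g. by a classifier
    uncertain: bool  # True if the assistant said "I don't know"


def coverage_gaps(log: list[LoggedAnswer], min_questions: int = 20) -> list[tuple[str, float, int]]:
    """Rank topics by the share of questions the assistant could not answer.

    Returns (topic, uncertainty rate, question count), highest rate first.
    """
    totals = Counter(a.topic for a in log)
    unanswered = Counter(a.topic for a in log if a.uncertain)
    ranked = [
        (topic, unanswered[topic] / n, n)
        for topic, n in totals.items()
        if n >= min_questions  # skip topics with too few questions to be meaningful
    ]
    return sorted(ranked, key=lambda row: row[1], reverse=True)
```

A docs team could run this monthly and start at the top of the list, which matches the "maybe it's time to focus on my React SDK docs" habit described above.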

Kenn So: This is a question I should have asked at the top of the interview - who are you primarily selling to? Which personas? How has it evolved? I imagine it's engineers at first, and now maybe for more mature teams, you have developer experience teams.

Emil Sorensen: You nailed it. That's pretty much it. There are some slight variations. Usually the core unifying thing is it's whoever owns and cares about onboarding documentation, customer education. Turns out this title usually is different from company to company. It's not super unified, but developer experience, head of documentation, technical writer, that kind of stuff.

Kenn So: I'm curious what you've learned from watching them use your product. Has it changed, or is it still fundamentally the same? I know they're a little bit more strict and critical when it comes to the output and will point out inaccuracies more.

Emil Sorensen: That's a good question. Generally, there's a complete mega trend now of people adopting LLMs into their workflows. Obviously I'm biased, because the people I talk to use these in the context of Kapa, but folks have multiple tools like ChatGPT, Gemini, and Claude open, and each person has a different mental model of which one they like for what: Claude for writing emails, ChatGPT a little bit better for meeting notes, and so on. So everyone's more comfortable with it.

That's a misconception a lot of companies have when they think about rolling out a system like this: "Oh, people aren't comfortable with LLMs. What if it hallucinates?" No system is perfect. But what's the alternative?

A really interesting insight is that people really like it when the bot just admits defeat and says, "I don't know." This is one of those things that happened by sheer luck in prompting during the first week or two before launching our first Discord deployment: whoever stayed up late that night and wrote the first couple of meta prompts over-indexed on "I don't know." It's been a core product feature ever since.

Kenn So: It was such a different experience back then to have a model say it didn't know, because everyone was trying to get the models to do everything and anything, and there was a lot of hype. That was my experience as well. I built a POC internally, and the first thing leaders tested was, "Can it say I don't know, or I can't answer that?" It's a more subtle user trust feature.

Maybe we can shift a little bit into building. Any guidance, tactics, or insights about how you were able to win all those amazing 150+ customers, including some of the best ones? One tactic I really love, even from two years ago, is that you build demo chatbots for prospective customers. That was really impressive.

Emil Sorensen: Take the LangChain example with Harrison at the time. Obviously we were all in the LangChain Discord, following what was going on, using LangChain first and all this stuff. Early on it was like, "Hey, this seems like a really helpful thing. Let me just send something over to Harrison." He was like, "Yeah, that's cool. Let's deploy it." That definitely helped.

Another thing that has really helped is being really, really narrow in our focus. A lot of people right now, which I get is natural, think, "Well, okay, Zendesk has this AI offering and Intercom has this." And they're fantastic. Intercom is fantastic, Zendesk maybe less so. But these systems have to cater to such broad audiences, whereas we really speak to the persona that uses Kapa, which is one that cares about technical documentation.

That focus has also really helped when someone brings those platforms up. We can say, "I'm not trying to solve your support team's problem or McDonald's support chatbot problem" - some other smart folks are doing that, and those are very different LLM problems to solve - "what I'm trying to do is this."

Kenn So: Okay, maybe since we're talking about how to get customers, shifting gears again into building your team. I'd love to just talk about how you're building your team right now in terms of the skills that you're looking for and any philosophies you're applying to it. Is there anything specifically for AI startups?

Emil Sorensen: It's not going to be anything super novel. We're just married to the YC playbook, to be honest. In terms of hiring, it's very merit-based and very big on cultural fit. Startups are not for everyone. The biggest learning here is that both sides need to evaluate carefully whether someone should join a startup like Kapa.

We have people come in and work with us for a couple of days, and we pay them a bunch to do that, which is definitely not the norm here in Europe where we're mostly hiring out of. Just to say, "Hey, this is going to be more chaotic than your job at Google. The amount of impact you can have will be 100x outsized compared to what you're able to do now." Some people just thrive in that context, and other people just like going back to their eight weeks of vacation a year here in Europe and 37-hour work weeks.

That's been a really important element too. In terms of AI focus, an interesting thing from an engineering perspective is that you get a bit of a split in engineering preferences. All of our engineers are phenomenal; I'm sure every founder says this, but we have a very high talent density team. Still, we have a couple of guys who just hate dealing with LLMs because of their non-deterministic nature. They get so much more joy from shipping a new integration or a new platform feature, because they can see the output. Other folks who are more research-minded just love wrangling this nasty LLM space. They can easily spend one or two weeks on a problem and don't get deterred when they don't see scores moving up.

Kenn So: That's so interesting. And then, how big is the team now?

Emil Sorensen: About 15, some part-timers here and there.

Kenn So: That's a lot of customers for 15 people. I know it's software, so there's that ratio thing. But it feels like you've gotten so much done with 15 people. Was keeping it lean kind of intentional? Because you've also raised venture funding, so you have the money to continually hire and potentially drive growth.

Emil Sorensen: The jury's still out, and maybe I'm completely wrong here, but I don't think more people solve startup problems, at least not necessarily in the AI space. You're seeing that proven out with the recent Windsurf acquisition and all this stuff. What you really need is a team that communicates very well across the commercial side, which is constantly gathering product input, and the engineering side, which is building stuff.

Kenn So: I think so. But at some point, you also need to add more people, because there's company stuff you need to keep track of. Maybe that's a good segue into what's next for you and Kapa. 150+ customers, 15 people, really great customer logos. What's next?

Emil Sorensen: We hold ourselves to very ambitious goals, very high growth rates. So it's just continuing to deliver on those and doing whatever is rational to keep moving in that direction.

It's not the most exciting thing, but it's really just keep making sure Kapa is the best version of what it is, which is a thing that answers questions about your products, and spending a ton of time doing customer interviews to understand as these models get better every week, what new points of value we can essentially build around that to keep making Kapa better.

Kenn So: Any preview you can share of an area you're going to expand into next?

Emil Sorensen: There's always the question around how much do you lean into something like support. That's still very open-ended because that's also a freaking rabbit hole to lean into.

There's a question around the underlying paradigms. I've heard people say "RAG is dead" so many times, and it turned out to be false, so I'm not going to say that. But I'm very interested to see how paradigms are starting to change. Back in January and February, we very publicly published some research on our experiments combining reasoning models with RAG. The results were really interesting, but at the time the models we were using just weren't good enough at tool calling to consistently call tools at the right time. That has changed very fast.

So that's the space we're looking very much into. But other than that, it's really just helping people that want to build product assistants do that in a really good way.

Kenn So: Okay, last part. How are you using AI personally?

Emil Sorensen: The most personal anecdote is probably for the first time since starting up two years ago - not a very inspirational thing to say - but I finally took some time off with my wife. We're about to have a baby in two or three months, our first kid, which I'm very excited for. There are lots of founder thoughts like how do you balance building a company and being a good dad.

Part of that is we wanted to take some time away. So we did a nice road trip a couple of weeks ago for a week, and Claude's advanced research was phenomenal for that. Very vanilla use case, but that's crazy.

Kenn So: I really like the latency when using something like that too. It makes me feel that the output is earned, that I have to sit and wait for like 10 minutes.

Emil Sorensen: That's a great point. It feels like so much of our experience is we expect immediacy, but the research forces you to kind of wait for that output. It's a little bit like photographing with film - you have to be a little bit more intentional with every shot.

Kenn So: Yes, it's similar to the Starbucks story: customers don't like it when the coffee is instantly available. They like to see the barista grind the coffee and use the steamer, even though the taste is exactly the same.

Emil Sorensen: Yeah, pretty much.

Kenn So: Awesome. Let’s wrap here. Emil, thanks again for the time!

Emil Sorensen: This was great. Thanks for having me.